SRFormerLight_SRx2_DIV2K

Official pretrained model from the paper

SRFormer: Permuted Self-Attention for Single Image Super-Resolution

Yupeng Zhou¹, Zhen Li¹, Chun-Le Guo¹, Song Bai², Ming-Ming Cheng¹, Qibin Hou¹

¹TMCC, School of Computer Science, Nankai University

²ByteDance, Singapore

arXiv: 2303.09735

The official PyTorch implementation of SRFormer: Permuted Self-Attention for Single Image Super-Resolution (arXiv). SRFormer achieves state-of-the-art performance in classical image SR, lightweight image SR, and real-world image SR.

The results are listed in the Results section below.

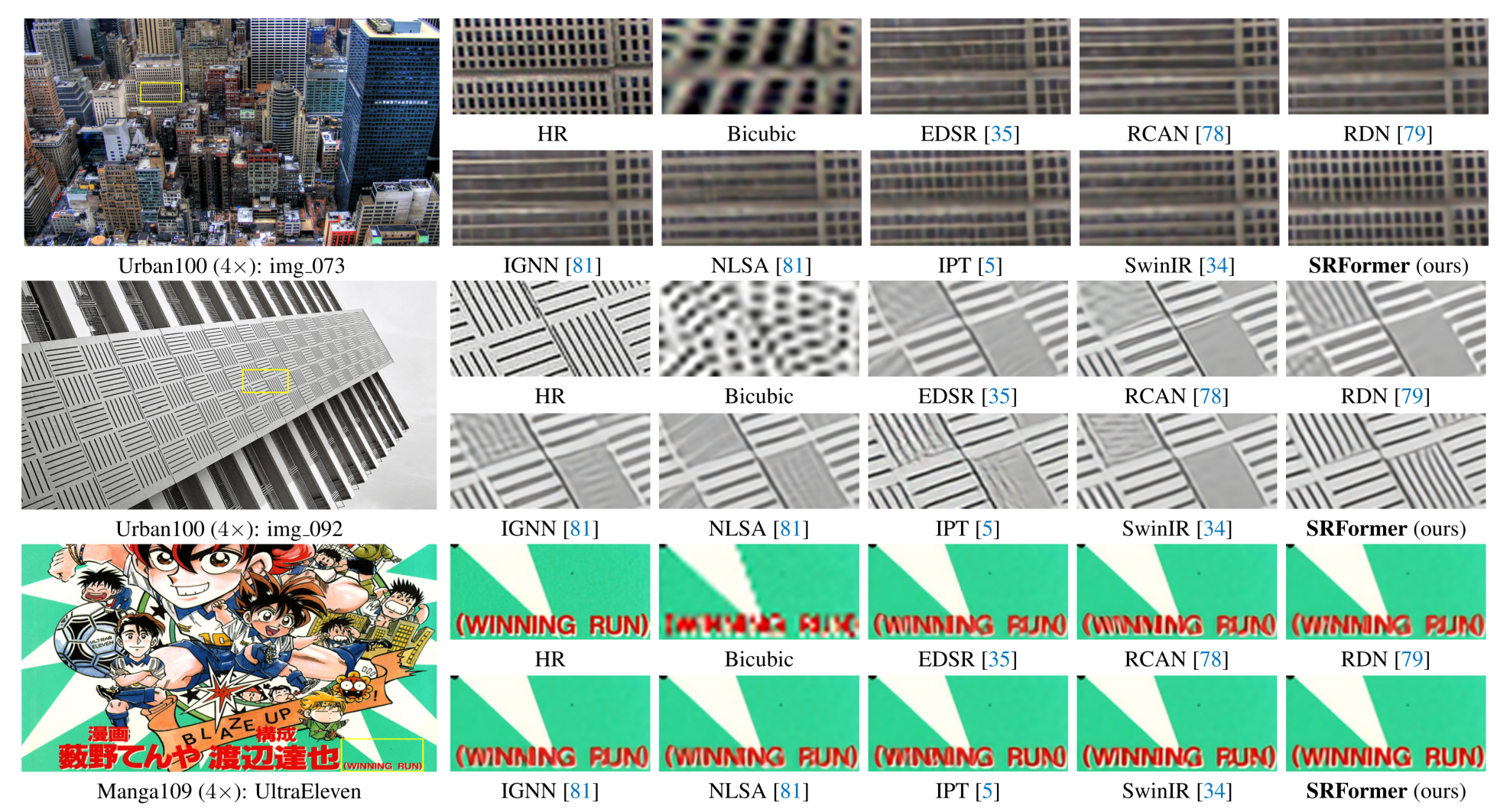

Abstract: In this paper, we introduce SRFormer, a simple yet effective Transformer-based model for single image super-resolution. We rethink the design of the popular shifted window self-attention, expose and analyze several characteristic issues of it, and present permuted self-attention (PSA). PSA strikes an appropriate balance between the channel and spatial information for self-attention, allowing each Transformer block to build pairwise correlations within large windows with even less computational burden. Our permuted self-attention is simple and can be easily applied to existing super-resolution networks based on Transformers. Without any bells and whistles, we show that our SRFormer achieves a 33.86dB PSNR score on the Urban100 dataset, which is 0.46dB higher than that of SwinIR but uses fewer parameters and computations. We hope our simple and effective approach can serve as a useful tool for future research in super-resolution model design. Our code is publicly available at https://github.com/HVision-NKU/SRFormer.
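The PSNR values quoted in the abstract follow the standard definition, PSNR = 10 · log10(MAX² / MSE). The block below is a minimal NumPy sketch of that formula for 8-bit images, not the evaluation code used by the authors. The function name `psnr` and its `crop_border` parameter are illustrative assumptions. SR benchmarks commonly measure PSNR on the luminance channel after cropping a border, and the colour conversion is not reproduced here.

```python
import numpy as np


def psnr(reference, restored, max_value=255.0, crop_border=0):
    """PSNR in dB between two images of identical shape (illustrative helper)."""
    ref = np.asarray(reference, dtype=np.float64)
    out = np.asarray(restored, dtype=np.float64)
    if ref.shape != out.shape:
        raise ValueError("images must have the same shape")
    if crop_border > 0:
        # Drop `crop_border` pixels on every side of the two spatial axes.
        ref = ref[crop_border:-crop_border, crop_border:-crop_border, ...]
        out = out[crop_border:-crop_border, crop_border:-crop_border, ...]
    mse = np.mean((ref - out) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))
```

Because the scale is logarithmic, a fixed gain in dB corresponds to a fixed ratio of mean squared errors, whatever the absolute PSNR level.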

Contents

Installation & Dataset, Training, Testing, Results, Pretrain Models, Citations, License and Acknowledgement

Installation & Dataset

Python 3.8

PyTorch >= 1.7.0

cd SRFormer

pip install -r requirements.txt

python setup.py develop
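Before installing, it can help to confirm that the local PyTorch build meets the stated minimum. The following is a small sketch using only the standard library; the helper names `version_tuple` and `meets_minimum` are hypothetical and are not part of the SRFormer repository.

```python
import re


def version_tuple(version):
    """Turn a version string such as '1.13.1+cu117' into (1, 13, 1).

    Anything after '+' (a local build tag) is ignored, and missing
    components are padded with zeros so that '1.7' compares equal to '1.7.0'.
    """
    numbers = re.findall(r"\d+", version.split("+")[0])
    padded = (numbers + ["0", "0", "0"])[:3]
    return tuple(int(n) for n in padded)


def meets_minimum(installed, minimum="1.7.0"):
    """Return True if `installed` is at least `minimum`."""
    return version_tuple(installed) >= version_tuple(minimum)


# Typical use, assuming PyTorch is already installed:
#   import torch
#   print(meets_minimum(torch.__version__))
```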

Dataset

We use the same training and testing sets as SwinIR. The following datasets need to be downloaded for training.

| Task | Training Set | Testing Set |
|---|---|---|
| classical image SR | DIV2K (800 training images) or DIV2K + Flickr2K (2650 images) | Set5 + Set14 + BSD100 + Urban100 + Manga109 |
| lightweight image SR | DIV2K (800 training images) | Set5 + Set14 + BSD100 + Urban100 + Manga109 |
| real-world image SR | DIV2K (800 training images) + Flickr2K (2650 images) + OST (10324 images for sky, water, grass, mountain, building, plant, animal) | RealSRSet+5images |

Training

Please download the datasets for your task and place them in the folder specified by the training option files in /options/train/SRFormer. Then follow the instructions below to train SRFormer.

train SRFormer for classical SR task

./scripts/dist_train.sh 4 options/train/SRFormer/train_SRFormer_SRx2_scratch.yml

./scripts/dist_train.sh 4 options/train/SRFormer/train_SRFormer_SRx3_scratch.yml

./scripts/dist_train.sh 4 options/train/SRFormer/train_SRFormer_SRx4_scratch.yml

train SRFormer for lightweight SR task

./scripts/dist_train.sh 4 options/train/SRFormer/train_SRFormer_light_SRx2_scratch.yml

./scripts/dist_train.sh 4 options/train/SRFormer/train_SRFormer_light_SRx3_scratch.yml

./scripts/dist_train.sh 4 options/train/SRFormer/train_SRFormer_light_SRx4_scratch.yml

Testing

test SRFormer for classical SR task

python basicsr/test.py -opt options/test/SRFormer/test_SRFormer_DF2Ksrx2.yml

python basicsr/test.py -opt options/test/SRFormer/test_SRFormer_DF2Ksrx3.yml

python basicsr/test.py -opt options/test/SRFormer/test_SRFormer_DF2Ksrx4.yml

test SRFormer for lightweight SR task

python basicsr/test.py -opt options/test/SRFormer/test_SRFormer_light_DIV2Ksrx2.yml

python basicsr/test.py -opt options/test/SRFormer/test_SRFormer_light_DIV2Ksrx3.yml

python basicsr/test.py -opt options/test/SRFormer/test_SRFormer_light_DIV2Ksrx4.yml
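The test commands above differ only in the scale factor and in whether the lightweight configuration is used, so they can be generated in a loop. The sketch below is one way to do that from the repository root. The helper names (`config_path`, `build_command`, `run_all`) are hypothetical, and the option file names are simply those listed above.

```python
import subprocess
import sys

SCALES = (2, 3, 4)


def config_path(scale, lightweight=False):
    """Path of the test option file for a given scale (names as listed above)."""
    variant = "light_DIV2K" if lightweight else "DF2K"
    return f"options/test/SRFormer/test_SRFormer_{variant}srx{scale}.yml"


def build_command(scale, lightweight=False):
    """Argument list equivalent to `python basicsr/test.py -opt <config>`."""
    return [sys.executable, "basicsr/test.py", "-opt", config_path(scale, lightweight)]


def run_all(lightweight=False, dry_run=True):
    """Print the commands for every scale, or run them when dry_run is False."""
    for scale in SCALES:
        command = build_command(scale, lightweight)
        if dry_run:
            print(" ".join(command))
        else:
            # check=True stops at the first failing test run.
            subprocess.run(command, check=True)
```

Calling `run_all(lightweight=True)` only prints the three lightweight commands; pass `dry_run=False` to execute them.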

Results

We provide results on classical image SR, lightweight image SR, and real-world image SR. More results can be found in the paper. The visual results of SRFormer will be uploaded to Google Drive soon.

Classical image SR

Results of Table 4 in the paper

Results of Figure 4 in the paper

Lightweight image SR

Results of Table 5 in the paper

Results of Figure 5 in the paper

Model size comparison

Results of Table 1 and Table 2 in the Supplementary Material

Real-world image SR

Results of Figure 8 in the paper

Pretrain Models

Pretrained models can be downloaded from Google Drive. To reproduce the results in the paper, download them and put them in the /PretrainModel folder.

Citations

You may want to cite:

@article{zhou2023srformer,
  title={SRFormer: Permuted Self-Attention for Single Image Super-Resolution},
  author={Zhou, Yupeng and Li, Zhen and Guo, Chun-Le and Bai, Song and Cheng, Ming-Ming and Hou, Qibin},
  journal={arXiv preprint arXiv:2303.09735},
  year={2023}
}

License and Acknowledgement

This project is released under the Apache 2.0 license. The code is based on BasicSR, Swin Transformer, and SwinIR. Please also follow their licenses. Thanks for their awesome work.

| Architecture | SRFormer |
|---|---|
| Scale | 2x |
| Color Mode | |
| License | Apache-2.0: Private use, Commercial use, Distribution, Modifications, Credit required, State Changes, No Liability & Warranty |
| Date | 2022-11-24 |