Notice

OpenModelDB is still in alpha and actively being worked on. Please feel free to share your feedback and report any bugs you find.

The best place to find AI Upscaling models

OpenModelDB is a community driven database of AI Upscaling models. We aim to provide a better way to find and compare models than existing sources.

Found 668 models

SPAN

1x

StarSample V2.0 Lite NS

by .derpy.

This is a model for the restoration of My Little Pony: Friendship is Magic, however it also works decently well on similar art.

V2.0 greatly improves upon V1.0's dataset in every way, taking models from (realistically) only being viable at 1x, to now being far more competent at 2x, more so for the models trained with heavier architectures in this release.

Improvements come as a significantly better understanding of compressions, and partly architecturally/partly dataset improved handling of details and overall understanding of content, leading to less artifacting and "AI smudging". The dataset takes from a larger variety of sources, despite being smaller than V1.0 (when tiled V1.0 would be 71,876 pairs), due to being filtered for IQA scores and detail density. It also contains many thousands of image pairs manually created to cover areas where there wasn't sufficient information.

This release also includes "NS", or "No Scale" models, which are a better representation of my initial goal with StarSample, and (StarSample V2.0 NS) should provide great 1x restoration results with little apparent artifacting, even where the heavier 2x models can fail due to having to increase resolution.

- 2x StarSample V2.0 HQ — _(HAT-L)_

- 2x StarSample V2.0 — _(ESRGAN)_

- 2x StarSample V2.0 Lite — _(SPAN-S)_

- 1x StarSample V2.0 NS — _(ESRGAN)_

- 1x StarSample V2.0 Lite NS — _(SPAN-S)_ — **THIS MODEL**

Github Release

ESRGAN

1x

StarSample V2.0 NS

by .derpy.

This is a model for the restoration of My Little Pony: Friendship is Magic, however it also works decently well on similar art.

V2.0 greatly improves upon V1.0's dataset in every way, taking models from (realistically) only being viable at 1x, to now being far more competent at 2x, more so for the models trained with heavier architectures in this release.

Improvements come as a significantly better understanding of compressions, and partly architecturally/partly dataset improved handling of details and overall understanding of content, leading to less artifacting and "AI smudging". The dataset takes from a larger variety of sources, despite being smaller than V1.0 (when tiled V1.0 would be 71,876 pairs), due to being filtered for IQA scores and detail density. It also contains many thousands of image pairs manually created to cover areas where there wasn't sufficient information.

This release also includes "NS", or "No Scale" models, which are a better representation of my initial goal with StarSample, and (StarSample V2.0 NS) should provide great 1x restoration results with little apparent artifacting, even where the heavier 2x models can fail due to having to increase resolution.

- 2x StarSample V2.0 HQ — _(HAT-L)_

- 2x StarSample V2.0 — _(ESRGAN)_

- 2x StarSample V2.0 Lite — _(SPAN-S)_

- 1x StarSample V2.0 NS — _(ESRGAN)_ — **THIS MODEL**

- 1x StarSample V2.0 Lite NS — _(SPAN-S)_

Github Release

ESRGAN

2x

StarSample V2.0

by .derpy.

This is a model for the restoration of My Little Pony: Friendship is Magic, however it also works decently well on similar art.

V2.0 greatly improves upon V1.0's dataset in every way, taking models from (realistically) only being viable at 1x, to now being far more competent at 2x, more so for the models trained with heavier architectures in this release.

Improvements come as a significantly better understanding of compressions, and partly architecturally/partly dataset improved handling of details and overall understanding of content, leading to less artifacting and "AI smudging". The dataset takes from a larger variety of sources, despite being smaller than V1.0 (when tiled V1.0 would be 71,876 pairs), due to being filtered for IQA scores and detail density. It also contains many thousands of image pairs manually created to cover areas where there wasn't sufficient information.

This release also includes "NS", or "No Scale" models, which are a better representation of my initial goal with StarSample, and (StarSample V2.0 NS) should provide great 1x restoration results with little apparent artifacting, even where the heavier 2x models can fail due to having to increase resolution.

- 2x StarSample V2.0 HQ — _(HAT-L)_

- 2x StarSample V2.0 — _(ESRGAN)_ — **THIS MODEL**

- 2x StarSample V2.0 Lite — _(SPAN-S)_

- 1x StarSample V2.0 NS — _(ESRGAN)_

- 1x StarSample V2.0 Lite NS — _(SPAN-S)_

Github Release

HAT

2x

StarSample V2.0 HQ

by .derpy.

This is a model for the restoration of My Little Pony: Friendship is Magic, however it also works decently well on similar art.

V2.0 greatly improves upon V1.0's dataset in every way, taking models from (realistically) only being viable at 1x, to now being far more competent at 2x, more so for the models trained with heavier architectures in this release.

Improvements come as a significantly better understanding of compressions, and partly architecturally/partly dataset improved handling of details and overall understanding of content, leading to less artifacting and "AI smudging". The dataset takes from a larger variety of sources, despite being smaller than V1.0 (when tiled V1.0 would be 71,876 pairs), due to being filtered for IQA scores and detail density. It also contains many thousands of image pairs manually created to cover areas where there wasn't sufficient information.

This release also includes "NS", or "No Scale" models, which are a better representation of my initial goal with StarSample, and (StarSample V2.0 NS) should provide great 1x restoration results with little apparent artifacting, even where the heavier 2x models can fail due to having to increase resolution.

- 2x StarSample V2.0 HQ — _(HAT-L)_ — **THIS MODEL**

- 2x StarSample V2.0 — _(ESRGAN)_

- 2x StarSample V2.0 Lite — _(SPAN-S)_

- 1x StarSample V2.0 NS — _(ESRGAN)_

- 1x StarSample V2.0 Lite NS — _(SPAN-S)_

Github Release

SPAN

2x

StarSample V2.0 Lite

by .derpy.

This is a model for the restoration of My Little Pony: Friendship is Magic, however it also works decently well on similar art.

V2.0 greatly improves upon V1.0's dataset in every way, taking models from (realistically) only being viable at 1x, to now being far more competent at 2x, more so for the models trained with heavier architectures in this release.

Improvements come as a significantly better understanding of compressions, and partly architecturally/partly dataset improved handling of details and overall understanding of content, leading to less artifacting and "AI smudging". The dataset takes from a larger variety of sources, despite being smaller than V1.0 (when tiled V1.0 would be 71,876 pairs), due to being filtered for IQA scores and detail density. It also contains many thousands of image pairs manually created to cover areas where there wasn't sufficient information.

This release also includes "NS", or "No Scale" models, which are a better representation of my initial goal with StarSample, and (StarSample V2.0 NS) should provide great 1x restoration results with little apparent artifacting, even where the heavier 2x models can fail due to having to increase resolution.

- 2x StarSample V2.0 HQ — _(HAT-L)_

- 2x StarSample V2.0 — _(ESRGAN)_

- 2x StarSample V2.0 Lite — _(SPAN-S)_ — **THIS MODEL**

- 1x StarSample V2.0 NS — _(ESRGAN)_

- 1x StarSample V2.0 Lite NS — _(SPAN-S)_

Github Release

ESRGAN

1x

Archiver AntiLines

by Loganavter

A specialized model from the Archivist suite, designed to remove linear artifacts. It excels at eliminating horizontal lines that other denoisers often mistake for part of the line art.

This model is optimized for input resolutions between 720p and 1080p. Using it on significantly different resolutions may produce suboptimal results.

All Archivist models are trained on a custom dataset generated by a physics-based degradation simulator. Recommended Workflow: Use Archivist to fix physical defects, then pass the result through DRUNet (low strength) to stabilize.

ESRGAN

1x

Archiver Medium

by Loganavter

A general-purpose model from the Archivist suite, providing a balanced approach to removing common film grain and dirt while preserving the original drawing texture. It is the recommended starting point for most footage.

This model is optimized for input resolutions between 720p and 1080p. Using it on significantly different resolutions may produce suboptimal results.

All Archivist models are trained on a custom dataset generated by a physics-based degradation simulator. Recommended Workflow: Use Archivist to fix physical defects, then pass the result through DRUNet (low strength) to stabilize.

ESRGAN

1x

Archiver RGB

by Loganavter

A specialized model from the Archivist suite, specifically tuned for tackling heavy chromatic (color) noise and severe color channel degradation, often seen in Metrocolor films. Note: its capabilities overlap with the Rough model, but it is better suited for color-based artifacts.

This model is optimized for input resolutions between 720p and 1080p. Using it on significantly different resolutions may produce suboptimal results.

All Archivist models are trained on a custom dataset generated by a physics-based degradation simulator. Recommended Workflow: Use Archivist to fix physical defects, then pass the result through DRUNet (low strength) to stabilize.

ESRGAN

1x

Archiver Rough

by Loganavter

An aggressive restoration model from the Archivist suite for severely degraded footage. It attempts to reconstruct heavily damaged or lost details through hallucination. Note: its capabilities overlap with the RGB model, but it focuses more on structural integrity than color noise.

This model is optimized for input resolutions between 720p and 1080p. Using it on significantly different resolutions may produce suboptimal results.

All Archivist models are trained on a custom dataset generated by a physics-based degradation simulator. Recommended Workflow: Use Archivist to fix physical defects, then pass the result through DRUNet (low strength) to stabilize.

ESRGAN

1x

Archiver Soft

by Loganavter

A light-touch restoration model from the Archivist suite, designed for high-quality sources. It focuses on gentle denoising while preserving the original film grain aesthetic. CAVEAT: In some scenarios, a standard DRUNet might yield subjectively better results. Always compare on your specific footage.

This model is optimized for input resolutions between 720p and 1080p. Using it on significantly different resolutions may produce suboptimal results.

All Archivist models are trained on a custom dataset generated by a physics-based degradation simulator. Recommended Workflow: Use Archivist to fix physical defects, then pass the result through DRUNet (low strength) to stabilize.

Compact

1x

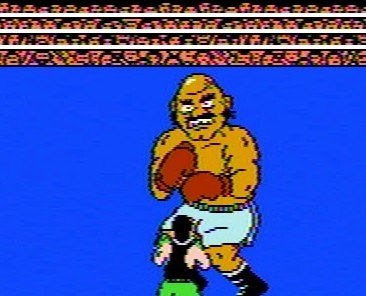

NES-Composite-2-RGB-Small

by pokepress

Takes composite/RF/VHS NES footage and attempts to restore it to RGB quality. Assumes footage has been properly deinterlaced via field duplication from 240p to 480p/720p/etc. Note that:

* All footage was captured in 240p/480p/720p NTSC.

* RGB footage was captured via an AV Famicom with the RGB Blaster via the Retrotink 2x or GBS Control.

* The model was trained exclusively on individual frames, so it can't fix things like dropouts.

* The even and odd fields of NES composite tend to be a bit...different from each other, so there will be some jitter at 60fps.

* I don't have access to an NES Toploader, so I wouldn't expect it to fix the jailbars very well.

Compared to the OmniSR variant of this model, this version runs more quickly (despite the larger model file size), and should do a good job on footage captured directly from the console using composite. If your footage is from a VHS tape or was captured over RF, the slower OmniSR model is probably a better choice.

Revision History:

* 1.5.0 (09/17/2025): Added "Small" version of model

Compact

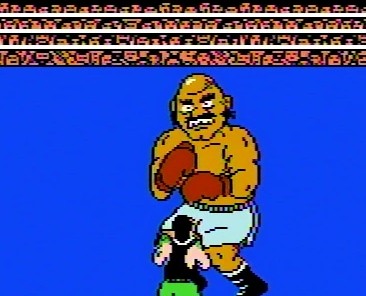

1x

Sega Genesis Cleanup-Small

by pokepress

Takes composite/RF/RGB/VHS Genesis footage and attempts to restore it to RGB quality. Assumes footage has been properly deinterlaced via field duplication from 240p to 480p/720p/etc. Note that:

* All footage was captured in 240p/480p/720p NTSC.

* Ground truth RGB footage was captured via a triple-bypassed Model 2 Genesis with new capacitors via the Retrotink 2x or GBS Control. Other footage was captured from various Model 1 Genesis consoles (with and without the "High Definition Graphics" mark).

* The model was trained exclusively on individual frames, so it can't fix things like dropouts.

* Should help fix color, blur, and jailbars.

* Cannot un-dither fully blended pixels.

Compared to the OmniSR variant of this model, this version runs more quickly (despite the larger model file size), and should do a good job on footage captured directly from the console using composite or RGB. If your footage is from a VHS tape or was captured over RF, the slower OmniSR model is probably a better choice.

Revision History:

1.0.0 (09/16/2025): Initial release.